Deepfakes are no longer just some weird tech curiosity; they’re appearing in finance approvals, executive comms and helpdesk workflows right across Aussie organisations.

Deepfake videos, made using AI algorithms like generative adversarial networks, can have a pretty dramatic impact on organisations by making it super easy for scammers to impersonate people and rip them off.

Attackers are using AI to clone voices, control video calls and come up with convincing stories at very low cost. There was a case back in early 2024 where a multinational got scammed to the tune of millions after staff were tricked into a deepfake video call where every “colleague” was a fake created by AI – that’s how far social engineering’s come with AI.

Australia’s anti-scam data shows the overall fraud problem is still measured in billions. Combined losses reported across major channels in 2024 were $2.03 billion, a reminder that failing to verify is still a very expensive exercise even when overall scam losses are trending down year-on-year.

“Deepfakes are not a problem from some sci-fi future. They are a real verification problem for today.”

– Ashish Srivastava

Getting the word out is key, and organisations need to adopt a complete approach to deepfake detection and response to avoid getting caught out by these evolving threats.

TL;DR

- Detection: Use signal-level analysis, model classifiers and provenance. Just remember the results are probabilistic, not definitive.

- Protection: Get your approvals and identity workflows sorted before you go buying tools.

- Response: Freeze the money movement fast, preserve the evidence and get your lawyers and PR team on the same page.

If you need some hands-on help building verification workflows or evaluating deepfake defences, talk to our Cyber Security teams in Perth or Sydney.

Introduction to deepfakes

Deepfakes are a rapidly evolving technology that use advanced machine learning algorithms to make ridiculously realistic fake images, videos and audio recordings. The term “deepfake” just combines “deep learning” and “fake”, which is pretty self-explanatory.

These algorithms hoover up huge datasets of real images, voices and movements, then reproduce them to make fakes that are almost impossible to tell from the real thing. As this tech gets more accessible, the threat to organisations goes up: deepfakes can be used to spread misinformation, manipulate public opinion, and just generally mess with trust in digital info.

Understanding how deepfakes are made and why they’re so convincing is the first step in truly understanding the threat environment we’re in today.

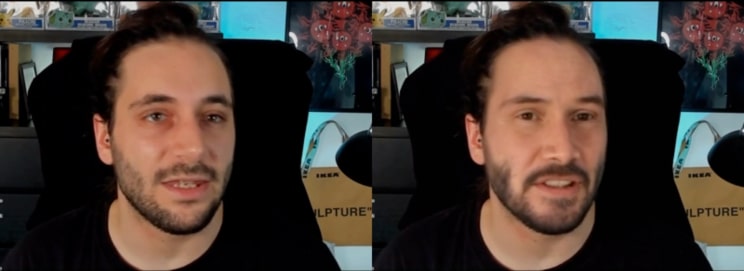

On the detection side, researchers are working on new algorithms that can spot the subtle clues deepfakes leave behind, like inconsistencies in lip syncing or unnatural facial expressions. The field is constantly evolving, and both creators and defenders are racing to stay ahead.

As a result, organisations need to be on top of their game when it comes to detecting the latest threats.

What counts as a deepfake these days?

Practically, you’ll be seeing four types:

- Voice cloning for high-pressure approvals and password resets, often using AI generated voices. You need to be able to spot AI generated voices to stop scammers and prevent unauthorised access.

- Live video puppeteering to impersonate execs on video conferencing platforms.

- Image fabrication for reputational damage or ID proofing bypass.

- Text-assisted pretexts where AI writes you some really convincing scripts and messages.

Scammers can now copy a target’s voice from just a few seconds of audio, so being able to detect AI-generated voices is key to stopping them from impersonating real people for their own gain.

Where malicious deepfakes are hitting Australian organisations

- Finance: supplier onboarding, bank details changed out of process, and approvals getting made without going through the normal channels.

- Executive comms: urgent requests, market-moving statements, and media inquiries that come out of left field.

- HR, Legal, Brand: coercion of staff, fabricated statements and image-based abuse, plus the risk of sensitive information being handed over to attackers who use deepfakes for coercion or blackmail.

- IT/Security: Help desk phone resets, VIP account recovery, social engineering – all the usual stuff that makes our jobs interesting.

Detection approaches that actually help

Signal-level forensics

Look for places where the audio or video just doesn’t look or sound right: prosody mismatch, spectral artefacts, lip-sync errors. Trouble is, even trained eyes and ears can miss them. Useful after the fact, but not so great in real time.

Model-based classifiers

These API-first detectors can scan all sorts of media across different channels, using trained models to analyse the content and give you a heads up if it looks like a deepfake. Just don’t expect them to be 100% perfect: model drift and false positives will be a problem, so be prepared.
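Because detector scores are probabilistic, it helps to decide up front what each score range actually triggers. Here’s a minimal Python sketch of that idea. The function name, the action labels and both thresholds are made up for illustration and aren’t taken from any particular detector, so tune them against your own labelled samples.

```python
# Hypothetical thresholds: assumptions for illustration, not vendor defaults.
REVIEW_THRESHOLD = 0.40
BLOCK_THRESHOLD = 0.85


def triage_score(score: float) -> str:
    """Map a detector's synthetic-likelihood score (0.0 to 1.0) to an action band."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= BLOCK_THRESHOLD:
        return "hold_and_escalate"
    if score >= REVIEW_THRESHOLD:
        return "verify_out_of_band"
    return "proceed_with_normal_controls"


if __name__ == "__main__":
    for sample in (0.12, 0.55, 0.93):
        print(sample, triage_score(sample))
```

A mid-range score shouldn’t approve or block anything by itself; in this sketch it just pushes the request into the call-back and MFA path.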

Provenance and signing

C2PA Content Credentials and the Content Authenticity Initiative provide cryptographically signed provenance metadata that records how a piece of media was made and edited, including whether AI tools were used where the creating tool declares it. This means you can be a bit more confident when verifying media authenticity. However, it’s not foolproof: some platforms strip out the metadata, so you can’t rely on it as a sole solution.

Biometrics and liveness

Pair biometric and liveness checks with out-of-band verification before granting permission for high-risk stuff like password resets or approvals.

Live vs post-event

Figure out where latency is acceptable. For call centres and comms, real-time voice analysis can give you a warning, but it’s got to be paired with some decent policy controls.

Protection stack: process before pixels

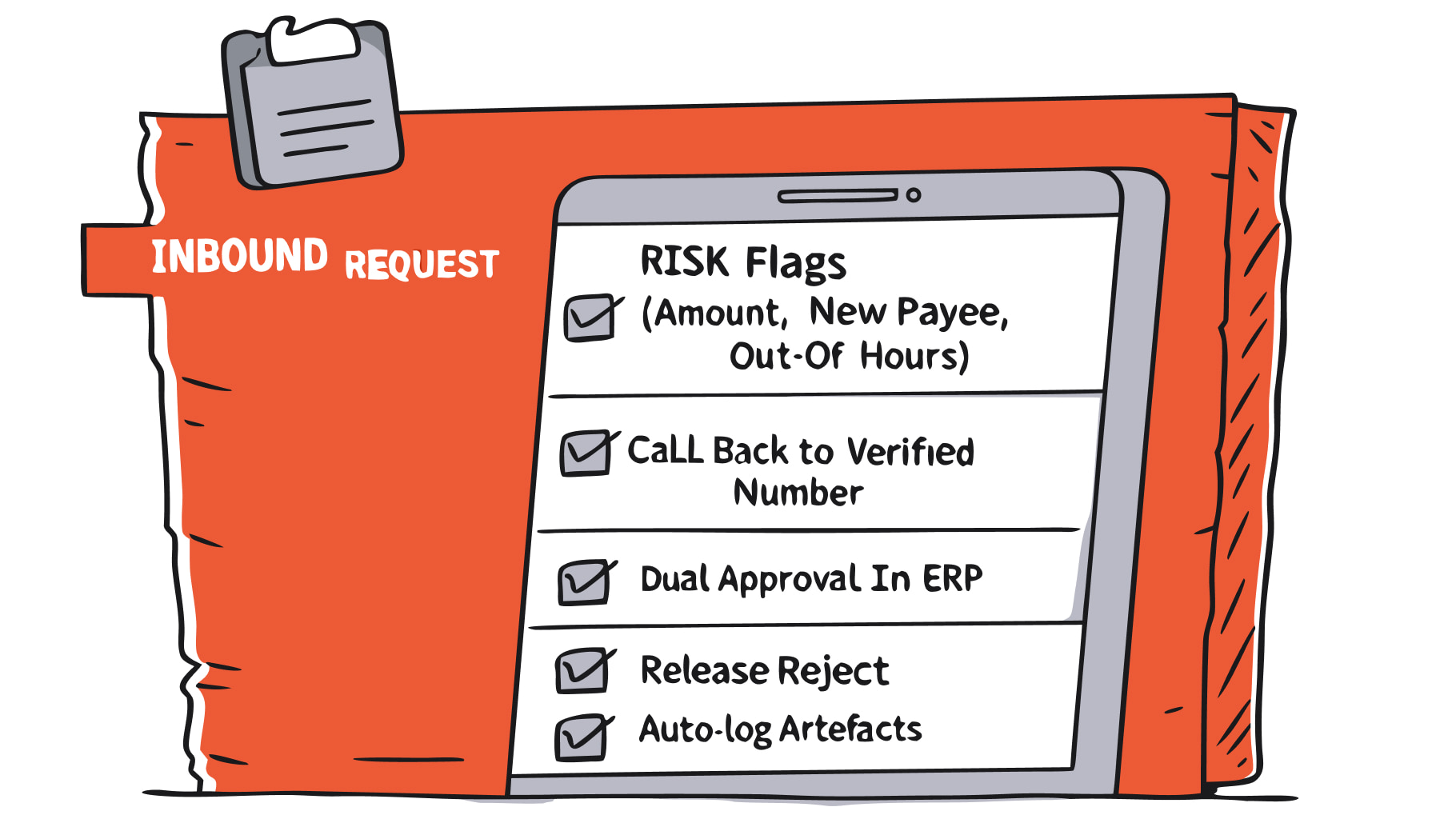

Verification workflows

- Payments and vendor changes: we insist on a call-back to a verified number on file, dual control, and no approvals via voice or chat alone. No exceptions.

- Executive requests: require an internal code phrase or a scheduled call from a company directory number. Don’t even think about authorising anything via Teams or a phone call alone.

- Help desk: for VIPs, no voice-only resets, ever. Require step-up MFA and a ticket in the queue.

If your organisation gets a lot of phone calls, you’re particularly vulnerable to deepfake attacks via voice cloning, so you need to secure your verification workflows to stop the bad guys getting at you.
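The payment and vendor-change rules above can be sketched as a simple pre-approval check. This is only an illustration: the `ChangeRequest` fields, the channel labels and the reading of dual control as two approvers other than the requester are all assumptions, so adapt them to your own finance policy.

```python
from dataclasses import dataclass, field

# Channels that must never be the only confirmation (labels are assumptions).
SPOOFABLE_CHANNELS = {"voice", "chat"}


@dataclass
class ChangeRequest:
    requester: str
    confirmed_via: set = field(default_factory=set)  # e.g. {"voice", "ticket"}
    callback_verified: bool = False                  # called back on the number on file
    approvers: set = field(default_factory=set)      # people who signed off


def blockers(request: ChangeRequest) -> list:
    """Return the reasons a payment or vendor change must not proceed yet."""
    reasons = []
    if not request.callback_verified:
        reasons.append("no call-back to the verified number on file")
    # Dual control (assumed here): two approvers, neither of them the requester.
    if len(request.approvers - {request.requester}) < 2:
        reasons.append("dual control not met")
    # Voice or chat alone is never enough.
    if not (request.confirmed_via - SPOOFABLE_CHANNELS):
        reasons.append("no confirmation outside voice or chat")
    return reasons


if __name__ == "__main__":
    risky = ChangeRequest("cfo", {"voice"}, False, {"cfo"})
    print(blockers(risky))
```

An empty list means the request has cleared these three checks; anything else gets sent back with the reasons attached.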

Identity basics

Get your MFA sorted, enforce some sensible conditional access, and dial back the admin privileges a bit. Map it all to the ACSC Essential Eight maturity model so you can keep track of progress and make sure improvements are auditable and incremental.

Comms policies

Make sure your reception and EAs have some basic scripts to follow. And make double sure to never, ever authorise a media request or an urgent wire transfer via Teams or a phone call alone.

Data Governance

Keep a tight lid on high-quality voice and video from execs to limit exposure and cut the risk of it getting out. Keep a list of who to contact to get that stuff taken down, and make sure you have templates to make the process smoother.

“For key finance items, we recommend implementing double process identification, where a secondary, independent team member confirms the identity of the requester.”

– Ashish Srivastava

Deepfake detection tools and choosing the right ones

Categories you’ll be dealing with

- API-first detectors that score audio, video and images. But make sure you go for a complete solution that can detect AI generated voices, as well as manipulated video and images – you want to be protected across all media types.

- Comms add-ons for contact centres and conferencing platforms.

- Media forensics suites for investigations, takedowns and litigation support.

What to look for when selecting a tool

- Modality coverage and accuracy metrics – are we talking apples for apples here?

- Can it run in real time? And what’s the SLA on latency?

- Data handling and privacy posture – especially if you are in Australia.

- How does it tie in with your SIEM/SOAR and case management?

- Licensing – what are the costs, especially if you’re a call centre or finance team?

- Consider a free trial to see how well it does the job before you commit.
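To compare tools apples for apples during a trial, one option is to run each candidate over the same labelled set of genuine and fake samples and work out the same few numbers for each. A minimal sketch, assuming you have already counted the outcomes yourself (the function name and the dictionary keys are illustrative):

```python
def detector_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Summarise a detector trial from confusion-matrix counts.

    tp: fakes flagged, fn: fakes missed,
    fp: genuine media flagged, tn: genuine media passed.
    """
    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "recall": ratio(tp, tp + fn),               # share of fakes caught
        "precision": ratio(tp, tp + fp),            # share of flags that were real fakes
        "false_positive_rate": ratio(fp, fp + tn),  # share of genuine media wrongly flagged
    }


if __name__ == "__main__":
    # Example counts are invented for illustration only.
    print(detector_metrics(tp=40, fp=5, tn=95, fn=10))
```

The false positive rate matters as much as recall for a busy contact centre, because every wrongly flagged genuine call costs someone a call-back.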

The big players are now partnering up with specialist detectors to detect manipulated voice, video and images in near real time.

These solutions are designed to pick up AI generated voices and synthetic media. Treat vendor claims as a starting point, but still make sure the tool fits with your system and data requirements, and always do your due diligence before integrating any third-party tools.

People and training

- Run targeted drills for Finance, EAs and Service Desk. Don’t stop at email phishing: use voice-cloned CEO calls and live video puppets too, to build awareness among staff about how to spot and deal with deepfakes.

- Give staff micro playbooks at point of work with call-back scripts, snippets for Slack or Teams, and an escalation shortcut.

- Track metrics – verification rate, time-to-verify and how well they stick to the escalation rules.
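The three metrics in the last point can be worked out from a simple log of drill outcomes. Here’s a rough sketch; the record keys (`verified`, `seconds_to_verify`, `followed_escalation`) are hypothetical, and using the median for time-to-verify is just one reasonable choice.

```python
from statistics import median


def drill_metrics(results: list) -> dict:
    """Summarise drill outcomes.

    Each result is a dict with hypothetical keys:
      "verified" (bool), "seconds_to_verify" (number, or None if never verified),
      "followed_escalation" (bool).
    """
    if not results:
        raise ValueError("no drill results to summarise")
    times = [r["seconds_to_verify"] for r in results
             if r["verified"] and r["seconds_to_verify"] is not None]
    total = len(results)
    return {
        "verification_rate": sum(r["verified"] for r in results) / total,
        "median_seconds_to_verify": median(times) if times else None,
        "escalation_adherence": sum(r["followed_escalation"] for r in results) / total,
    }


if __name__ == "__main__":
    sample = [
        {"verified": True, "seconds_to_verify": 120, "followed_escalation": True},
        {"verified": False, "seconds_to_verify": None, "followed_escalation": False},
    ]
    print(drill_metrics(sample))
```

Tracking the same numbers drill after drill, per team, shows whether the playbooks are actually being used.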

Testing and exercises to run

- Purple-team scenarios – like a blended email + chat + live call. For example, pair a simulated phishing email with a piece of manipulated video content so the team practises spotting the deepfake elements quickly and improves its detection protocols.

- Tabletops – finance, legal and comms get together to decide when to freeze payments, when to brief executives and when to go public.

- What ‘good’ looks like – approvals checked, evidence captured, platform abuse channels used, and recovery comms aligned.

Incident response for suspected deepfakes

Intake and triage

Classify by channel, monetisable risk, reputational risk, type of malicious content, and how much damage it could do to the organisation.
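One way to make that classification repeatable is to capture it as a small record and derive a priority from the worst of the two risk ratings. This is a sketch under assumptions: the three-level scale, the field names and the P1 to P3 labels are illustrative, not a standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class DeepfakeReport:
    channel: str          # e.g. "phone", "video call", "social media"
    content_type: str     # e.g. "voice clone", "fabricated image"
    monetisable_risk: Level
    reputational_risk: Level


def priority(report: DeepfakeReport) -> str:
    """Derive a triage priority from the worse of the two risk ratings."""
    worst = max(report.monetisable_risk, report.reputational_risk)
    return {Level.HIGH: "P1", Level.MEDIUM: "P2", Level.LOW: "P3"}[worst]


if __name__ == "__main__":
    report = DeepfakeReport("phone", "voice clone", Level.HIGH, Level.LOW)
    print(priority(report))
```

Taking the worst rating rather than an average keeps a high-value payment fraud from being diluted by a low reputational score.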

Containment

- Freeze approvals and stop outbound payments.

- Invalidate sessions and rotate credentials where impersonation led to clicks or logins.

- Get the takedown report to the platforms or eSafety as needed. The eSafety Commissioner has shown a willingness to escalate to court where necessary.

Forensics and preservation

Keep the originals, the metadata, the call logs and chat transcripts with a clear chain of custody. If there’s provenance metadata available, preserve that too; some platforms strip it, which in itself can be a bad sign. Review the preserved evidence afterwards to work out how the deepfake was delivered and which controls it got past.
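A common way to support chain of custody is to hash each original file as soon as it’s collected and log who collected it and when. The sketch below uses Python’s standard `hashlib`; the entry fields and function names are assumptions, so align them with whatever your legal and forensics teams actually require.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large recordings don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def custody_entry(path: Path, collected_by: str, note: str = "") -> dict:
    """Build one chain-of-custody log entry for an original evidence file."""
    return {
        "file": path.name,
        "sha256": sha256_of(path),
        "size_bytes": path.stat().st_size,
        "collected_by": collected_by,
        "collected_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }
```

Work on copies after hashing, and re-hash before handing anything to counsel or a platform so you can show the file hasn’t changed.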

Legal and PR

Work with counsel on when to notify, potential defamation exposure, and privacy duties. Get messaging right so staff and customers know how to verify future comms.

Governance for Australia: policy, privacy, misinformation and suppliers

Policy language

Define synthetic media, prohibited uses, and required verification steps for approvals and resets. Make sure you have a plan in place to retain evidence for suspected incidents. And don’t forget to remind people of the importance of using synthetic media in a thoughtful and ethical way to avoid privacy violations or deception.

Privacy and consent

When you record calls or use biometric verification, it’s crucial to plan with the Australian Privacy Principles in mind.

In particular, take the time to think about only collecting what you need (APP 3), letting people know at or before the time you collect their data (APP 5), and keeping use and disclosure to the purposes you first stated (APP 6).

Supplier clauses

Ask your media and marketing partners to get behind Content Credentials where they can, disclose when they’re using synthetic media, and be willing to work with takedowns. Keep in mind that at the moment, platform provenance is still not that reliable in practice.

Don’t Get Faked Out

Deepfakes aren’t just a tech headache… they’re a direct assault on trust, eroding confidence in communications and decisions across Australian organisations.

As AI makes these fabrications cheaper and more convincing, the risks of fraud, reputational damage, and operational chaos skyrocket, with scam losses already hitting $119 million in early 2025 alone.

But here’s the empowering truth: By reframing deepfakes as a verification challenge first and a technological one second, you can blend human intuition with smart tools for leveled-up resilience.

At TechBrain, we’ve seen firsthand how tightening approvals and resets, training frontline teams like finance and IT on real-world simulations, and meticulously preserving evidence can slash vulnerabilities fast, turning potential disasters into dodged bullets.

FAQs

Can you reliably detect deepfakes on a live phone call?

Sort of. Use real-time analysis to raise red flags, then confirm through a call-back and MFA before taking action.

Do watermarks and provenance like C2PA help today?

They do, but only when they are kept end-to-end. Many platforms still strip or hide them. Treat them as helpful extras, not the whole package.

Which finance controls stop voice-cloned CEO scams?

Dual approval, call-backs to a number you know can be trusted, no approvals by voice alone, and SLAs that give you time to verify.

How do we train without creating fear?

Make it role-based and practical. Give people scripts, show them the escalation path and make it worthwhile for them to verify over getting it done fast.

What evidence should we keep for takedowns or legal action?

Keep all the originals including headers and metadata, call logs, chat transcripts, timestamps and any captured provenance. If you’re dealing with explicit synthetic imagery, eSafety can help with complaint pathways and has even taken matters to court.

Sources and further reading

- ACSC Annual Cyber Threat Report 2023–24: AI-enabled social engineering, voice imitation and vishing. cyber.gov.au

- ACCC National Anti-Scam Centre, Targeting Scams 2024: $2.03b in reported losses. ACCC

- ACSC’s Essential Eight – where your cyber defences need to be at. cyber.gov.au