Artificial intelligence is now at the heart of both cyber-defence and cyber-crime.

A single large-language model (LLM) can triage billions of log data entries in seconds to find a threat, yet the same technology can also draft a perfect email impersonating a trusted local supplier, complete with correct project references and a convincing reason for an urgent change in their BSB and account numbers.

For CIOs, CISOs, IT managers and business owners, that duality is the new battleground.

We get it. If you’re a CIO/CISO, you need to translate these amorphous threats into a concrete risk posture for your board. If you’re an IT Manager, you’re on the front lines, trying to secure new, often unsanctioned, AI tools and workflows on a finite budget. And if you’re a business leader, you’re balancing the pressure to innovate and stay competitive with the fear of a breach.

The question is the same for everyone: “How do we move forward without getting burned?”

That’s why we wrote this guide. We’ll cut through the noise and get beyond the warnings. Think of this as a strategic conversation to frame the opportunities and risks AI presents in the cyber security landscape.

Here’s the practical roadmap we’ll follow:

- How AI has altered the economics of attack and defence.

- The five most damaging AI-enabled threats, with real Australian data.

- A readiness framework mapped to the Essential Eight, NIST AI-RMF, ISO/IEC 42001, and the Privacy Act.

- A multi-layered control stack – policy, technical, and human – that can be implemented without derailing innovation.

- Where a Managed Service Provider (MSP) adds value when internal resources are tight.

Our promise in this guide is to walk you through each of these areas clearly and concisely. The world of AI security is complex and moves fast, but it doesn’t have to be overwhelming.

By the end of this article you’ll have more than a list of threats; you’ll have a direction for assessing your unique risks and a clear, actionable idea of what to do next to proactively protect your organisation so you can innovate with confidence.

AI significantly reduces the level of sophistication needed for cybercriminals to operate.

– Australian Signals Directorate (ASD)

How AI Has Reshaped the Threat Landscape

To build a resilient strategy we need to understand the battlefield. AI has given both attackers and defenders powerful new tools, changing the economics of cyber conflict.

Defender Super-Powers

For security teams, AI amplifies human capabilities, sharpening threat detection and enabling response at machine speed. What was once the domain of enterprise budgets is now available to the mid-market through modern security platforms.

| Defence Capability | Security Pay-off | Typical Use Case |
| --- | --- | --- |
| Real-time anomaly detection | Flags subtle outliers that human analysts would miss. | Monitoring cloud workloads for unusual activity. |
| Behavioural analytics | Learns “normal” user and system patterns to spot drift. | Detecting insider threats or compromised accounts. |
| Autonomous response | Provides containment in seconds, not hours. | Automatically isolating a device showing signs of ransomware. |
| Predictive threat modelling | Forecasts potential breach paths and attack surfaces. | Prioritising patch management and security hardening. |

Attacker Force-Multipliers

Unfortunately these capabilities are not exclusive to defenders. AI gives attackers the ability to automate and scale attacks that were once manual and time-consuming, effectively reducing the barrier to entry for sophisticated cybercrime.

| Adversarial Capability | Criminal Pay-off | Typical Attack Scenario |
| --- | --- | --- |
| LLM-Powered Social Engineering | Bypasses human skepticism and email security filters. | Hyper-personalised CEO fraud or payment redirection scams. |
| AI-Generated Polymorphic Code | Evades traditional signature-based antivirus and EDR. | Deployment of undetectable ransomware or spyware. |
| Automated Vulnerability Scanning | Dramatically shortens the time from flaw to exploit. | Rapidly finding and attacking newly announced or unpatched system vulnerabilities. |
| Real-time Deepfake Generation | Defeats “voice-to-verify” security controls. | Live, interactive vishing (voice phishing) calls to authorise fraudulent transactions. |

The development cycle of threats has been reduced from weeks to under 24 hours.

The cost of entry for these attacks has dropped to almost zero. A stolen API key for a major AI platform, often sold for only a few dollars on dark-web markets, gives an attacker a near-unlimited capacity to generate malicious content.

Five High-Impact AI-Enabled Threats

While the theory is important, it’s the practical application of these tools that poses a direct risk to your business. Here we break down the five most critical threats and highlight key mitigation strategies.

AI-Powered Cyberattacks & Social Engineering Techniques

The classic phishing attack has been supercharged. Imagine your accounts payable team receives a Teams call. A flawless voice clone of the CFO demands an urgent payment to a supplier, followed by an email with “updated” bank details.

LLMs automate this ruse at scale, creating convincing back-stories and even spoofing entire message threads for credibility. This is the primary driver behind the significant increase in payment-redirection scams, which, according to the ACCC’s latest Targeting Scams Report, cost Australian businesses $152.6 million in 2024 alone, a 66% jump on the previous year.

Mitigation Highlights:

To counter this, a multi-layered approach is essential.

- Implement phishing-resistant Multi-Factor Authentication (MFA), such as FIDO2 hardware tokens, to protect credentials even if they are stolen.

- Deploy an AI-driven email security gateway that can analyse the tone and intent of a message, not just its technical headers.

- Enforce a strict “pause-and-verify” financial process, requiring staff to call back on a trusted phone number already on file before actioning any change to payment details.
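To make the “pause-and-verify” idea concrete, here is a minimal Python sketch of how a finance system might hold a bank-detail change until a call-back is recorded. Everything in it is an assumption for illustration: the `BankDetailChange` model, the `TRUSTED_NUMBERS` supplier file, the sample supplier and phone numbers, and the rule that the verifier must be someone other than the requester. Treat it as a starting point for your own process, not a finished control.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BankDetailChange:
    """A pending request to change a supplier's payment details (hypothetical model)."""
    supplier: str
    new_bsb: str
    new_account: str
    requested_by: str
    number_in_request: str            # number supplied in the email or call: never trusted
    verified_by: Optional[str] = None


# Hypothetical supplier master file: numbers recorded before any request arrived.
TRUSTED_NUMBERS = {"Acme Civil": "+61 8 5550 0100"}


def record_callback(change, dialled_number, staff_member):
    """Mark the change verified only if the call-back used the number on file."""
    on_file = TRUSTED_NUMBERS.get(change.supplier)
    if on_file is None or dialled_number != on_file:
        return False   # unknown supplier, or staff dialled the number from the request
    if staff_member == change.requested_by:
        return False   # assumption: the verifier must differ from the requester
    change.verified_by = staff_member
    return True


def can_action(change):
    """The payment system should refuse the change until a call-back is recorded."""
    return change.verified_by is not None
```

The key design choice is that the number supplied in the request is stored but never used for verification; only the number already on file counts.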

Deepfakes & Voice Cloning

Deepfake videos and audio, created by malicious actors using generative AI, represent a new frontier of fraud.

It’s now possible for an attacker to splice a LinkedIn headshot onto a stock video background and have the avatar lip-sync a ChatGPT-generated script in real time. The goal is to create false but convincing evidence to authorise fraudulent actions or cause reputational damage.

In fact, the OAIC has recorded a year-on-year rise of 46% in ‘vishing’ style social engineering attacks on government agencies, headlined by the recent Scattered Spider group’s attack on Qantas.

Mitigation Highlights:

Defending against deepfakes requires moving beyond simple human verification.

- Use collaboration tools that have real-time “liveness” checks for video feeds, which can prompt users for micro-expressions to prove they are real.

- Enforce multi-channel verification for any sensitive request, where a video call must be backed up by an SMS or a call to a separate, trusted number.

- Review your executives’ online media exposure to limit the amount of high-fidelity audio available for attackers to clone.

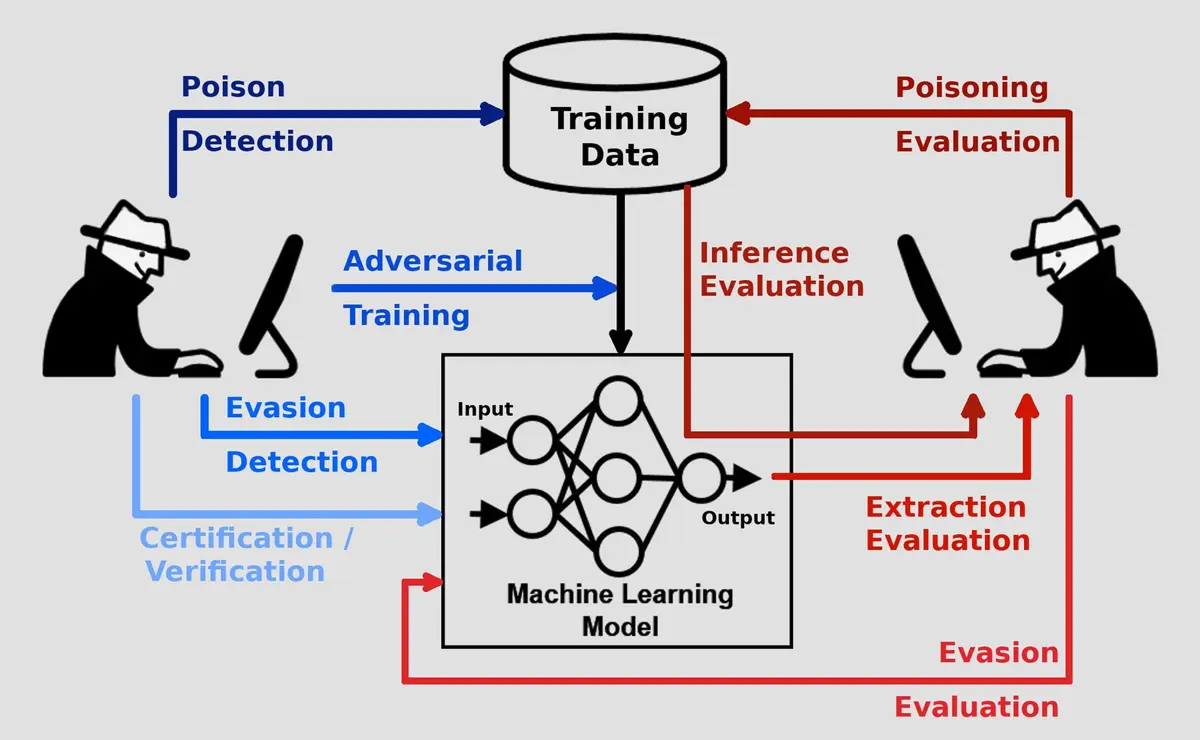

Adversarial ML & Model Poisoning

These attacks target your AI investments directly. The Australian Cyber Security Centre (ACSC) highlights risks in the AI data supply chain, maliciously modified (poisoned) data, and model drift as critical weaknesses in the AI lifecycle.

- Data Poisoning: An attacker subtly corrupts the data used to train your AI model, teaching it to misclassify malicious events as benign.

- Evasion Attacks: Malicious inputs are crafted to exploit a model’s blind spots, such as making slight pixel tweaks to an image to fool a vision-based security camera.

- Model Inversion: The attacker queries a model repeatedly to reverse-engineer and recover the private or sensitive data it was trained on.

Mitigation Highlights:

Protecting the integrity of your models is paramount.

- Maintain a detailed AI Bill of Materials (AI-BOM) that lists all datasets, data sources, labels, and model versions used in your systems.

- Use a gated retraining pipeline, where new data is verified with integrity checksums and sourced from signed, trusted locations before it’s used.

- Deploy adversarial-example detectors in your production environment, using open-source libraries like the IBM Adversarial Robustness Toolbox.
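As an illustration of the first two controls, the sketch below records a SHA-256 checksum for each dataset in a very small AI-BOM and refuses retraining if any file is missing or has changed since approval. The BOM layout (`model_version`, `datasets`, `file`, `source`, `sha256`) and the function names are our own assumptions, not a standard schema. Verifying signatures on the data source is out of scope here and would need to be added separately.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path):
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def bom_entry(data_dir, file_name, source):
    """Create an AI-BOM dataset entry at approval time (hypothetical layout)."""
    return {
        "file": file_name,
        "source": source,
        "sha256": sha256_of(Path(data_dir) / file_name),
    }


def gate_retraining(ai_bom, data_dir):
    """Return the datasets that fail the gate; retrain only if the list is empty."""
    failures = []
    for entry in ai_bom["datasets"]:
        path = Path(data_dir) / entry["file"]
        if not path.is_file():
            failures.append((entry["file"], "missing"))
        elif sha256_of(path) != entry["sha256"]:
            failures.append((entry["file"], "checksum mismatch"))
    return failures


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "train.csv").write_bytes(b"id,label\n1,benign\n")
        bom = {
            "model_version": "fraud-detector-0.1",  # illustrative name
            "datasets": [bom_entry(tmp, "train.csv", "internal-warehouse")],
        }
        print(gate_retraining(bom, tmp))  # no failures while the file is unchanged
```

In practice the AI-BOM would live in version control, so any change to a recorded checksum goes through the same review as a code change.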

Automated Vulnerability Discovery & Exploitation

AI-powered tools, such as the LLM-enhanced project AutoSploit, are designed to autonomously discover and exploit vulnerabilities.

They can query public asset databases, fingerprint your online services and draft custom exploit code at machine speed. Cloud misconfigurations, like public S3 buckets or over-permissive IAM roles, become one-click targets.

Mitigation Highlights:

The speed of automated attacks requires an equally fast defence.

- Implement a continuous Attack Surface Management (ASM) program that tests both your external and internal exposures on a daily basis.

- Aim to patch critical CVEs in under 7 days as per the ACSC’s guidance to achieve a high maturity level.

- Deploy “canary tokens”, decoy digital assets placed within your network that trigger high-priority alerts if they are accessed by automated scanning tools.
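One small check an ASM program might run daily is flagging over-permissive IAM policies before an automated attacker finds them. The sketch below parses a standard IAM policy document (already loaded as a Python dict) and reports `Allow` statements that pair a wildcard action with a wildcard resource. The function names are ours, and the check is deliberately narrow: it ignores `NotAction`, conditions, and resource-level wildcards, so treat it as a first-pass filter only.

```python
def _as_list(value):
    """IAM allows a single string or a list for Action, Resource and Statement."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_permissive_statements(policy):
    """Return the Sid of each Allow statement with wildcard actions on all resources."""
    findings = []
    for statement in _as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action"))
        resources = _as_list(statement.get("Resource"))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action and wildcard_resource:
            findings.append(statement.get("Sid", "<no Sid>"))
    return findings


# Illustrative policy: the first statement is too broad, the second is scoped.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "TooBroad", "Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {
            "Sid": "Scoped",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    ],
}

if __name__ == "__main__":
    print(overly_permissive_statements(example_policy))
```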

Shadow AI & Governance Gaps

Sometimes the biggest threat comes from well-meaning employees. “Shadow AI” occurs when staff use unapproved, public AI tools for work tasks.

For example, a marketing employee uploads a customer CSV file to a free online chatbot for “segment analysis”, or a developer fine-tunes a public model using proprietary source code. Both actions can breach NDAs and the Privacy Act 1988, and both create data leakage risks that are invisible to IT.

Mitigation Highlights:

Strong governance is the only effective control for Shadow AI.

- Establish a clear Acceptable Use Policy for AI, explicitly stating that no customer or production data is to be entered into public LLM platforms.

- Provide a secure, managed internal sandbox (e.g., Azure OpenAI on a private network) so staff have a safe alternative and don’t default to public tools.

- Implement Data Loss Prevention (DLP) rules that can inspect outbound data and prompts for sensitive information like PII or source code.
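To show what a DLP rule for prompts can look like at its simplest, the sketch below scans outbound text for three kinds of sensitive content before it reaches a public LLM. The patterns and the `scan_prompt` function are illustrative assumptions, not the rule set of any particular DLP product; commercial tools use far richer detection. Card-number candidates are confirmed with the Luhn checksum to cut down on false positives from ordinary reference numbers.

```python
import re

# Illustrative patterns only; a production rule set would be much broader.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,16}\b")
PRIVATE_KEY = re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")


def luhn_valid(digits):
    """Standard Luhn checksum: double every second digit from the right."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def scan_prompt(text):
    """Return labels for the sensitive content found in an outbound prompt."""
    findings = []
    if EMAIL.search(text):
        findings.append("email address")
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            findings.append("possible card number")
            break
    if PRIVATE_KEY.search(text):
        findings.append("private key")
    return findings
```

A gateway or browser extension could call `scan_prompt` and block or redact the request whenever the returned list is not empty.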

Assessing Your AI Security Readiness

To move from awareness to action you need to quantify your organisation’s specific risk profile. This involves mapping threats to business impact and understanding your obligations under recognised risk management standards and key Australian frameworks.

AI Risk Matrix

A risk matrix helps you prioritise. We recommend clients complete this exercise for each business unit and identify the most likely and most impactful threats to their business.

| Likelihood | Impact | Example Threat | Recommended Control |
| --- | --- | --- | --- |
| High | Medium | LLM-crafted spear-phish targeting finance team. | Phishing-resistant MFA (FIDO2); secure email gateway; user training. |
| Medium | High | Deepfake video call authorising a fraudulent payment. | Out-of-band call-back procedure for payment changes; transaction anomaly detection. |
| Low | High | Poisoned training data crippling a fraud-detection model. | AI-BOM; gated retraining pipeline; data-integrity monitoring. |
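If you want to rank the rows rather than just list them, one common approach is to turn the ratings into numbers and multiply. The sketch below does this for the three example threats in the matrix. The 1 to 3 scale, the multiplication, and the tie-break on impact are our assumptions for illustration; use whatever scoring method your enterprise risk framework already defines.

```python
# Assumed scale: Low = 1, Medium = 2, High = 3.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}

# (threat, likelihood, impact) taken from the example matrix.
threats = [
    ("LLM-crafted spear-phish targeting finance team", "High", "Medium"),
    ("Deepfake video call authorising a fraudulent payment", "Medium", "High"),
    ("Poisoned training data crippling a fraud-detection model", "Low", "High"),
]


def risk_score(likelihood, impact):
    """Score a threat as likelihood multiplied by impact."""
    return LEVELS[likelihood] * LEVELS[impact]


def prioritise(rows):
    """Sort by score, highest first; ties go to the row with the higher impact."""
    return sorted(
        rows,
        key=lambda row: (risk_score(row[1], row[2]), LEVELS[row[2]]),
        reverse=True,
    )


if __name__ == "__main__":
    for name, likelihood, impact in prioritise(threats):
        print(risk_score(likelihood, impact), name)
```

Under these assumptions the spear-phish and deepfake rows both score 6, and the deepfake ranks first because its impact is rated higher.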

Compliance & Framework Mapping

Navigating the compliance maze can be overwhelming. As trusted advisors we recommend a layered approach. Treat these frameworks as nested rings of security: start with the Australian baseline, then overlay AI-specific governance.

| Framework / Law | Why it Matters for AI | Minimum Actions |
| --- | --- | --- |
| ACSC Essential Eight | Baseline cyber hygiene | Patch ≤ 14 days (ML2), block macros, enforce MFA |
| ISO 31000:2018 – Risk Management | “Gold-standard” principles that underpin all later ISO security standards | Adopt its risk methodology, link AI register to enterprise risk log |
| ISO/IEC 27001:2022 – ISMS | Globally recognised information-security benchmark | Align AI controls (asset mgmt., access, crypto) to ISMS clauses 5-10 |
| ISO/IEC 42001:2023 | First certifiable AI-governance standard | Gap-analyse policies; prepare evidence for future audit |
| NIST AI Risk-Mgmt Framework 1.0 | Granular AI-risk functions (Map, Measure, Manage, Govern) | Use for threat modelling and control selection |
| National Framework for the Assurance of AI in Government | Sets “cornerstones & practices” for safe, ethical AI across all gov’t levels | Mirror its 5 assurance cornerstones in your own governance roadmap |
| Privacy Act 1988 / Australian Consumer Law | Privacy & misleading-representation duties still apply to chatbots, LLMs, etc. | Embed privacy impact assessments; validate chatbot claims |

Australia doesn’t yet have a single ‘AI Act’ like the EU, but existing rules still bite: the Australian Consumer Law already covers misleading chatbot claims, and regulators expect organisations to build ISO-grade risk and security disciplines such as ISO 31000 for risk and ISO 27001 for information security into every AI deployment.

— Ashish Srivastava, Head of Cyber Security, TechBrain

Building AI-Resilience with an MSP Partner

Most mid-market firms can’t afford a PhD in adversarial machine learning. For businesses that lack deep in-house AI security expertise, partnering with a specialist MSP is the best way to reduce costs, enhance security and scale safely.

A partnership with TechBrain delivers:

- 24×7 AI-Native SOC: A Security Operations Centre that uses LLM-based event correlation backed by expert human analysts.

- vCISO Service: Strategic guidance to align your AI adoption with your risk appetite, budgets, and compliance obligations.

- Actionable Threat Intelligence: We translate complex threats from frameworks like MITRE ATLAS into plain English for your board.

- Economies of Scale: Access to enterprise-grade toolsets like model-integrity scanners and deepfake detection at a fraction of the direct license cost.

Innovation should give you a competitive edge, not exposure. A good MSP ensures it stays that way by strengthening your cyber security posture with guidance tailored to your business.

Future Trends to Watch

The AI security landscape is changing fast. As your strategic advisor we are tracking several key trends over the next 24 months:

- Autonomous Red-Teaming: AI systems will be used not just for defence, but to continuously and automatically test our own systems, as a permanent “sparring partner” to find weaknesses.

- Regulatory Acceleration: The Australian government will be drafting new AI-governance clauses for the upcoming Cyber Security Act amendments. We predict that demonstrating AI risk management will soon be a requirement for major contracts.

- AI Security Posture Management (AI-SPM): Expect the rise of dedicated platforms, like those for cloud security, to discover and manage the attack surface of your AI models.

See also: the National Framework for the Assurance of Artificial Intelligence in Government, released by the Data & Digital Ministers Meeting (June 2024). It maps practical assurance steps to Australia’s AI Ethics Principles and is a useful model for private-sector governance.

Securing an AI-Powered Future

AI is neither friend nor foe; it’s raw computational power waiting for human intent. Attackers use it for scale; defenders must use it for speed. In this arms race, only one side needs to be right once.

The answer is a proactive strategy built on governance, layered controls and continuous vigilance. Your journey should follow three simple steps:

- Understand your unique AI exposure by mapping your assets, threats and compliance drivers.

- Implement a layered defence that combines policy, technical controls and human processes.

- Monitor and iterate continuously. Models drift and threats mutate; your security program must evolve with them.

TechBrain can take this heavy lifting off your team so you can focus on transformation, not firefighting. If you’re ready to move from awareness to action, talk to our cyber team today.

In the age of AI, a reactive posture is a failed posture. Let’s get you ahead of the curve.